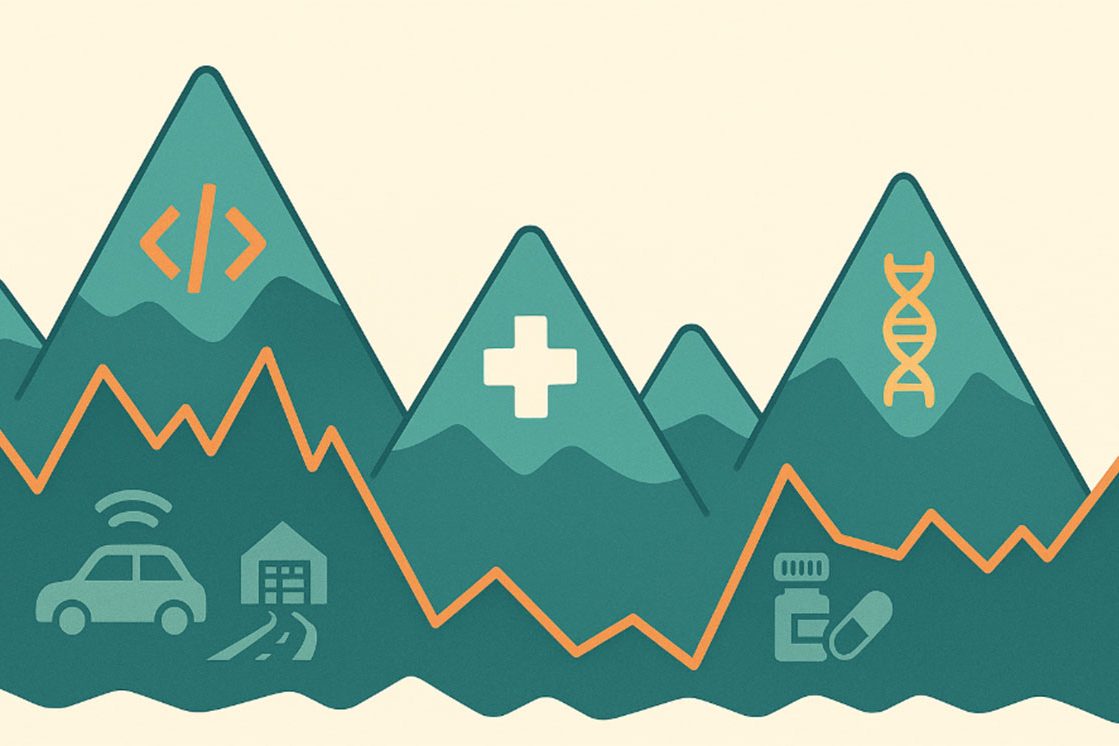

Why some tasks feel like sci-fi and others still act like dial-up

AI progress isn’t a smooth upward curve; it’s a jagged skyline. One discipline races ahead, another stalls, and a few keep surprising even the optimists. Understanding those gaps is the difference between shipping a game-changing product and burning budget on promises the math can’t keep…yet.

Peaks of Superhuman Performance

1. Code & Contracts

Large language models (LLMs) now translate Ruby into Go, refactor legacy COBOL, and draft NDAs in seconds. They live in a world of text-rich, rule-bound data where success is easy to score: does the code compile, does the clause match precedent? With feedback loops that tight, models sprint.

2. Medical Images

Computer-vision nets spot diabetic retinopathy and melanoma with sensitivity rivaling that of specialists. Millions of labeled images plus binary ground truth give the algorithms a crystal-clear scoreboard.

3. Protein Structure

DeepMind’s AlphaFold turned a 50-year scientific riddle, predicting a protein’s 3D structure from its amino-acid sequence, into a largely solved problem by leveraging vast public protein datasets and well-defined physics constraints. When the rules of the game are explicit, AI can finish years ahead of schedule.

Valleys Where Reality Bites

1. Supply-Chain Forecasting

Hand an LLM a multi-tier inventory puzzle and it may hallucinate a phantom shipping lane, or silently apply yesterday’s demand spike to next year’s planning horizon. Ground truth is sparse, error tolerance microscopic, and the test set shifts every economic quarter.

2. Autonomous Driving

Vision models nail lane detection in perfect daylight but still confuse oddly angled cardboard for a concrete block. The long tail of corner cases is enormous, and the cost of a single false negative is measured in lawsuits, not click-through rates.

3. Drug Discovery

Predicting protein folds is half the battle; translating that insight into safe, effective molecules collides with messy biology, limited trial data, and an FDA that doesn’t grade on a curve. Billions flow in, hit rates creep up by single digits.

Why the Gaps Exist

| Factor | Favors Rapid Progress | Slows Things Down |
|---|---|---|
| Data Abundance | GitHub, PubMed images | Rare failure modes, proprietary logs |
| Label Clarity | “Compiles / Doesn’t” | “Looks safe in mice… maybe” |
| Error Tolerance | Typos → minor | 2% miss → product recall |
| Feedback Loop Speed | Seconds to verify code | Years for clinical trials |
| Regulatory Overhang | Minimal for chatbots | Heavy for roads & drugs |

When three or more slow-down factors stack, AI drags its feet no matter how many GPUs you throw at it.
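The stacking rule above can be sketched as a simple checklist score. This is a minimal illustration, not a validated model: the `DomainProfile` class, its field names, and the two example profiles are hypothetical readings of the table, and the threshold of three comes from the rule of thumb in the text.

```python
from dataclasses import dataclass, fields


@dataclass
class DomainProfile:
    """One boolean per row of the table; True means the slow-down column applies.

    Field names are hypothetical labels for the five factors in the table.
    """
    scarce_data: bool           # Data Abundance: rare failure modes, proprietary logs
    fuzzy_labels: bool          # Label Clarity: no crisp pass/fail signal
    low_error_tolerance: bool   # Error Tolerance: a small miss rate is costly
    slow_feedback: bool         # Feedback Loop Speed: verification takes months or years
    heavy_regulation: bool      # Regulatory Overhang: roads, drugs, and similar


def slowdown_count(profile: DomainProfile) -> int:
    """Count how many slow-down factors apply to a domain."""
    return sum(bool(getattr(profile, f.name)) for f in fields(profile))


def likely_slow(profile: DomainProfile, threshold: int = 3) -> bool:
    """Apply the rule of thumb: three or more stacked factors means slow progress."""
    return slowdown_count(profile) >= threshold


# Illustrative profiles only; a real audit would justify each flag with evidence.
code_generation = DomainProfile(False, False, False, False, False)
drug_discovery = DomainProfile(True, True, True, True, True)

for name, profile in [("code generation", code_generation),
                      ("drug discovery", drug_discovery)]:
    print(f"{name}: {slowdown_count(profile)} slow-down factors, "
          f"likely slow = {likely_slow(profile)}")
```

Treating each factor as a yes/no flag keeps the audit quick; a team that wants finer resolution could swap the booleans for weighted scores, at the cost of having to defend the weights.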

Calibrating Your Ambition

- Audit the Data Stockpile

  Do you have millions of clean, labeled examples or forty spreadsheets with competing definitions? Investment decisions start here.

- Quantify the Cost of a Miss

  A chatbot typo harms brand polish; a mis-classified lesion harms a patient. Your acceptable error rate should adjust accordingly.

- Tighten the Feedback Loop

  Even high-stakes domains can de-risk progress with simulated environments, synthetic data, or staged rollouts that surface mistakes before regulators do.

- Layer in Human Guardrails

  A blended approach (AI draft, expert review) often beats full automation or full manual labor on both quality and speed.
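To make "quantify the cost of a miss" and "layer in human guardrails" concrete, the arithmetic can be sketched as an expected-cost comparison. Everything here is an assumption for illustration: the function names, the simple linear cost model, and every number in the example (case volume, error rate, cost per miss, reviewer catch rate, review cost) are hypothetical placeholders, not figures from any real deployment.

```python
def expected_miss_cost(n_cases: int, error_rate: float, cost_per_miss: float) -> float:
    """Expected cost of errors under full automation (no human review)."""
    return n_cases * error_rate * cost_per_miss


def expected_cost_with_review(
    n_cases: int,
    error_rate: float,
    cost_per_miss: float,
    catch_rate: float,
    review_cost_per_case: float,
) -> float:
    """Expected cost when an expert reviews every AI draft.

    Assumes reviewers catch a fixed fraction (catch_rate) of the AI's errors
    and that every case incurs the same review cost.
    """
    residual_error_rate = error_rate * (1.0 - catch_rate)
    return n_cases * (residual_error_rate * cost_per_miss + review_cost_per_case)


# Hypothetical inputs: 10,000 cases, a 2% miss rate, $5,000 per miss,
# reviewers who catch 90% of misses at $20 per reviewed case.
automated = expected_miss_cost(10_000, 0.02, 5_000)
blended = expected_cost_with_review(10_000, 0.02, 5_000, 0.9, 20)

print(f"full automation: ${automated:,.0f}")
print(f"AI draft + expert review: ${blended:,.0f}")
```

Under these made-up numbers review pays for itself, but the comparison flips when misses are cheap or reviewers are expensive, which is why the acceptable error rate has to be set per domain.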

The Takeaway

Expect world-class AI in narrow slices, steady gains across broader ground, and stubborn plateaus where data are scarce, stakes are high, or physics hasn’t yielded its terms of service. Winners will be the teams that know precisely which terrain they’re standing on and set goals—plus budgets—that match.

If your roadmap assumes uniform AI superpowers, rewrite it. The future isn’t a single wave; it’s a tide pool of surges and still waters. Navigate accordingly, and you’ll surf the peaks without capsizing in the troughs.