Today’s AI assistants have already changed how we gather information. Think of how quickly a tool like ChatGPT or Gemini can synthesize a report. But a new breed of AI agent is emerging that promises to fundamentally reshape knowledge work, business intelligence, and decision-making. These continuous agents won’t just wait for our questions; they’ll persistently work across sessions, proactively reach out with insights, and even collaborate with other AI agents on our behalf. For organizations, this shift could mean turning software from a passive tool into an active partner in decision support. The stakes are high: companies that harness these agents could see smarter, faster decisions and a lasting competitive edge, while those that don’t risk being left with yesterday’s information. As one recent executive survey found, over half of companies are already deploying autonomous AI agents in workflows, with another 35% planning to integrate them by 2027.

Why is this agent design shift so critical? Because knowledge work and business strategy thrive on up-to-date context and continuous learning. In dynamic fields, from market intelligence to supply chain management, facts on the ground change by the hour. Yet most of our AI tools behave like savants with short-term memory: they give a brilliant answer once and then go idle. Continuous agents, by contrast, promise an “always-on” intelligence, monitoring data streams, refining their understanding, and nudging us when something important happens. This could transform organizational decision-making from a periodic, manual exercise into a real-time collaborative dialogue between human leaders and their digital counterparts. The result is a future where insights don’t just flow when we ask, but arrive unprompted at just the right moment. For any executive trying to build a responsive, knowledge-driven organization, that is a game-changer.

Current State

To appreciate where we’re heading, let’s start with where we are. In the past year, deep research agents have become a staple in many professionals’ toolkits. These are AI agents (offered by companies like OpenAI, Google, Perplexity, and others) designed to do multi-step, in-depth research on a user’s prompt and deliver a comprehensive, structured report. Unlike a standard chatbot that might give a quick answer, a deep research agent operates more like a tireless junior analyst.

Give it a complex question, for example, a detailed market analysis or a technical literature review, and it will spend several minutes autonomously browsing sources, reading and collating information, then produce a briefing that could pass for a human researcher’s work.

OpenAI’s “ChatGPT Deep Research” mode, for example, runs for 5 to 30 minutes per query, digging through web results and even analyzing uploaded documents, before returning an in-depth answer complete with citations and an executive summary. Early users have found the output almost like a briefing a human analyst might write, with well-organized sections and references that make fact-checking easier.

These agents don’t just answer questions; they investigate them.

How are businesses using deep research agents today? Primarily for tasks that require gathering and synthesizing information at speed and scale. Think of market intelligence reports, competitive analyses, technical research, or policy briefs: work that once took an analyst days can now reach first-draft stage in the length of a coffee break.

Instead of manually querying multiple sources, analysts can rely on the agent to deliver up-to-the-minute findings and even summarize late-breaking developments, gaining an information edge in real time.

In academic and R&D settings, tools like Elicit and Perplexity’s research modes scan thousands of papers or patents and summarize key insights, accelerating the literature review process.

And inside organizations, these agents serve as on-demand report generators: a product manager might use one to gather customer feedback themes ahead of a strategy session, or an HR team might generate a quick best-practices brief on hybrid work policies.

Deep research agents excel at structured, in-depth tasks that benefit from combing through large volumes of data and presenting a coherent narrative.

For all their cleverness, today’s deep research agents are still fundamentally constrained in two ways. First, they are session-limited, essentially amnesic outside the context of the single research task you give them.

They do a thorough job in one go and even allow follow-up questions in the same chat, but each “research mission” is separate.

The agent isn’t remembering to check back in tomorrow with new findings; if you want an update next week, you have to run a new query.

OpenAI’s ChatGPT Deep Research, for instance, does not persistently track a research project over multiple days; once it delivers the report, it waits for your next prompt (or goes idle).

Google’s equivalent (integrated in their Gemini AI assistant) similarly allows you to ask follow-ups in the moment and even save the output to a document, but it won’t continuously monitor the web for new information after producing its report.

These agents have no long-term memory or initiative beyond the task at hand.

Second, today’s research agents remain passive and user-initiated. They are fantastic at responding to a prompt with a deep dive, but only responding. They don’t proactively ask clarifying questions before they begin (beyond perhaps a quick prompt for parameters), and they certainly don’t reach out unbidden with insights.

As an AI strategy blog put it recently, “most AI apps today… are still fundamentally reactive… only acting when triggered.” The agent won’t decide on its own that an ambiguous question needs more clarification; it will do its best, sometimes running into dead-ends or hallucinating if the instructions were vague.

It also won’t self-correct mid-way or change course unless a human intervenes. Once the task is complete, the system essentially shuts down; it doesn’t set new goals or keep going without further orders.

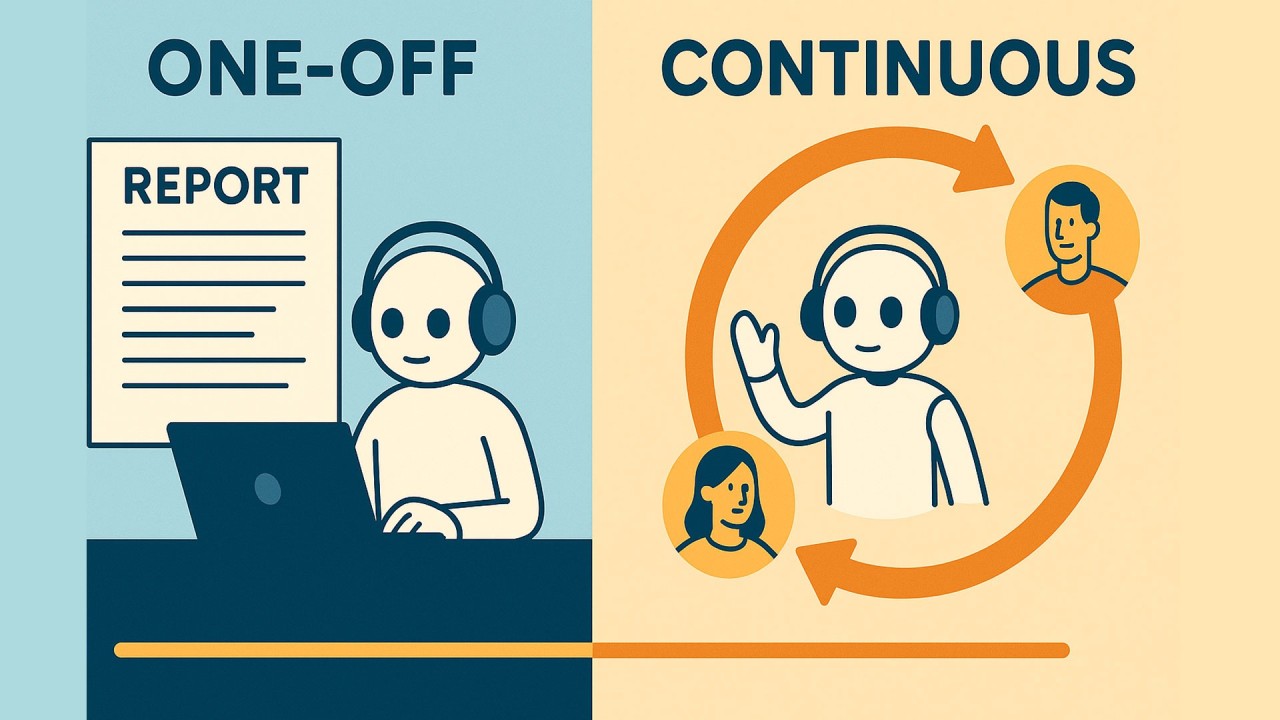

In enterprise terms, these tools are more like on-demand consultants than autonomous team members. They wait to be asked, deliver their report, and then go quiet.

These limitations have real implications. Imagine a market intelligence agent that gives you a great briefing today on competitor X. Tomorrow, competitor X launches a new product, but your agent isn’t going to ping you about it unless you specifically ask again. Yes, you can set up scheduled tasks in OpenAI’s ChatGPT, but they don’t let you run Deep Research.

Or consider an internal research agent that helped compile your company’s knowledge on a topic. If a week later some data changes or an error is discovered, the agent won’t proactively update the report; someone has to notice and run a new query.

I’ve mentioned numerous times that this “single-shot” style means valuable context can slip through the cracks. I recently used a deep research agent to compile an exhaustive guide on vehicle wheel and tire fitment, a niche domain requiring data from dozens of manufacturer spec sheets and forums. The agent delivered a detailed reference manual in minutes, a task that could have taken me days. But when a new car model was released shortly after (with a slightly different wheel specification), the agent wasn’t “watching” to incorporate that update. Nor did it ask me if I wanted ongoing tracking of new models. In my view, the agent was brilliant at gathering the data in the moment, but it behaved like a temporary contractor; once the initial job was done, it didn’t stick around to refine or maintain that knowledge base.

The strengths were clear (speed, thoroughness, the ability to parse arcane fitment data), but so were the gaps where a more persistent, conversational partner could have added value. It’s these gaps that the next generation of AI aims to fill.

Enter the Continuous Agent: Always-On, Context-Aware, and Proactive

Where deep research agents stop, continuous agents begin. A continuous agent is essentially an AI that never clocks out; it carries context forward, keeps goals in mind between sessions, and isn’t shy about initiating interactions. Instead of a one-off researcher, think of it as an autonomous collaborator or digital colleague. Its defining traits include the following.

Persistent memory and context

A continuous agent retains what it learns and builds on it over time. Rather than resetting after each chat, it remembers your goals, preferences, and the evolving state of the project. This means if you consult it next week, it knows what was discussed last week. Enterprise AI think-tanks emphasize that such agents maintain “long-term memory… resulting in persistent systems that serve as the foundation for organizational knowledge.”

In practical terms, this could mean an AI assistant that recalls past research you’ve done and can integrate new data into that existing analysis seamlessly. No more re-explaining context to the AI every single time: the agent knows the backstory.

Recent AI architectures are increasingly enabling this persistence: for example, using vector databases or extended context windows so that an agent can draw on a memory of previous interactions and documents.

The goal is a continuously learning agent that evolves its knowledge just as a human team member would, rather than a mayfly with a 4,000-token lifespan.
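To make the memory idea concrete, here is a minimal sketch of how an agent might store notes and recall the most relevant one later. It is an illustration only: `MemoryStore`, `embed`, and `cosine` are hypothetical names, the bag-of-words vector is a toy stand-in for a real embedding model, and a production system would persist the notes in a vector database rather than in process memory.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: a bag-of-words count vector (stand-in for a real embedding model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class MemoryStore:
    """Hypothetical long-term memory: keeps notes and recalls the most relevant ones."""

    def __init__(self) -> None:
        self._notes: list[tuple[str, Counter]] = []

    def remember(self, note: str) -> None:
        # Store the note alongside its vector so recall does not re-embed it.
        self._notes.append((note, embed(note)))

    def recall(self, query: str, k: int = 1) -> list[str]:
        # Rank every stored note by similarity to the query and keep the top k.
        q = embed(query)
        ranked = sorted(self._notes, key=lambda item: cosine(q, item[1]), reverse=True)
        return [note for note, _ in ranked[:k]]


memory = MemoryStore()
memory.remember("user tracks competitor X product launches")
memory.remember("user prefers weekly summaries by email")
print(memory.recall("latest news on competitor X launches"))
```

The point of the design is that recall is driven by relevance rather than recency, so context from last week can resurface without the user restating it.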

Proactive engagement and follow-ups

Continuous agents don’t wait passively for instructions. They initiate conversations when appropriate, whether it’s to ask for clarification, suggest a follow-up task, or alert you to relevant changes.

In contrast to reactive chatbots, a proactive agent “continuously monitors its environment, analyzes patterns, and anticipates needs.” For example, if you give a continuous agent a broad goal (“keep me updated on competitor X’s product launches”), it might periodically reach out: “Hey, it’s been a week and I spotted a press release from competitor X. Shall I summarize it for you?”

Or, within a single complex task, if your prompt is ambiguous, the agent might ask you a clarifying question before diving in blindly. This kind of back-and-forth makes the AI feel much more like a collaborator.

Advanced interactivity is a key part of this: an agent that carries the conversation forward on its own. As one AI engineer noted, a truly interactive system would “ask follow-up questions, make personalized recommendations, and adapt based on user responses,” rather than just outputting an answer and falling silent.

Imagine an agent that doesn’t just answer your query about quarterly sales, but follows up with: “Would you like to know which product lines drove that change?” That’s the shift from reactive to proactive.

Continuous goal pursuit and learning

A continuous agent can operate in an ongoing loop of perception, reasoning, and action. It’s not limited to a single prompt-response cycle. Instead, it can be tasked with longer-term objectives and adjust its course as needed.

This might involve monitoring new information streams (news, databases, internal metrics) and updating its outputs accordingly without being explicitly asked each time. For instance, such an agent working on market intelligence might run daily web searches on pre-defined topics and only notify you when something significant turns up, essentially acting as an autonomous research analyst on your team.

In technical terms, researchers describe this as agents that “stay alive and persist across sessions, trigger actions autonomously, and refine execution in real time.” We’re starting to see prototypes: think of experimental frameworks like AutoGPT or BabyAGI, where the AI can iteratively generate new tasks for itself based on a high-level goal.

While early versions of these have been brittle (often going off track or needing supervision), they illustrate what’s possible: an AI that doesn’t need hand-holding for each step, but can continuously loop through plan, act, observe, and re-plan.
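The monitoring behavior described in this section can be sketched as a small loop. This is a simplified illustration under stated assumptions, not how any particular product works: `MonitorAgent` and its `fetch`, `is_significant`, and `notify` hooks are hypothetical, the keyword filter stands in for model-based judgment, and the sketch covers only perceive, reason, and act (it does no planning or re-planning).

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class MonitorAgent:
    """Hypothetical always-on agent: polls a source and alerts only on significant new items."""
    fetch: Callable[[], list[str]]          # assumed data source, e.g. a daily search
    is_significant: Callable[[str], bool]   # assumed relevance filter
    notify: Callable[[str], None]           # assumed channel to the human
    seen: set[str] = field(default_factory=set)

    def step(self) -> int:
        """Run one perceive-reason-act cycle; return how many alerts were sent."""
        sent = 0
        for item in self.fetch():            # perceive
            if item in self.seen:            # skip anything already processed
                continue
            self.seen.add(item)
            if self.is_significant(item):    # reason
                self.notify(item)            # act
                sent += 1
        return sent


# Simulated search results for two consecutive days.
batches = iter([
    ["competitor X posts hiring ad"],
    ["competitor X posts hiring ad", "competitor X launches new product"],
])
alerts: list[str] = []
agent = MonitorAgent(
    fetch=lambda: next(batches, []),
    is_significant=lambda item: "launches" in item,
    notify=alerts.append,
)
for _ in range(3):  # in practice a scheduler would trigger each step
    agent.step()
print(alerts)
```

Because the agent remembers what it has already seen, repeated items never trigger a second alert, and the human hears from it only when the filter fires.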

Collaboration with other agents (multi-agent systems)

One exciting aspect of continuous agents is that they don’t have to work alone. Just as complex business projects are handled by teams of people with different specialties, AI agents can increasingly team up, each handling part of a problem and exchanging results.

These multi-agent setups can tackle problems no single monolithic model could manage as effectively. For example, in an always-on scenario monitoring a domain like supply chain disruptions, you might have one agent specialized in scanning news and social feeds for incident reports, another agent analyzing the company’s inventory data for vulnerabilities, and a third agent synthesizing these inputs into an actionable alert or strategy.

Research on such multi-agent LLM systems has exploded in the last year; one survey showed a sharp rise in papers on LLM-based multi-agent collaboration.

“Specialized LLM agents” working as a “dream team” can combine their strengths to solve complex, evolving tasks. One agent might gather raw data, another interprets it, a third makes a recommendation.

In a continuous setup, these agents could be running 24/7, handing off tasks to each other and notifying human stakeholders only when needed. We’re essentially talking about an AI workforce, not replacing humans, but handling the drudgery and micro-monitoring so humans can focus on high-level decisions.
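The supply chain example above can be sketched as three small functions handing results to one another. Everything here is hypothetical and simplified: the function names, the `Incident` type, the keyword matching (a stand-in for an LLM reading the news), and the 14-day stock threshold are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Incident:
    region: str
    description: str


def news_agent(headlines: list[str]) -> list[Incident]:
    """Scan 'Region: text' headlines for disruption reports (keyword match stands in for an LLM)."""
    incidents = []
    for line in headlines:
        region, _, description = line.partition(": ")
        if "strike" in description or "closure" in description:
            incidents.append(Incident(region, description))
    return incidents


def inventory_agent(incidents: list[Incident], stock_days: dict[str, int]) -> list[Incident]:
    """Keep incidents in regions with under 14 days of stock; unknown regions count as 0 days."""
    return [i for i in incidents if stock_days.get(i.region, 0) < 14]


def synthesis_agent(at_risk: list[Incident]) -> Optional[str]:
    """Turn at-risk incidents into one alert, or None when no human attention is needed."""
    if not at_risk:
        return None
    lines = [f"- {i.region}: {i.description}" for i in at_risk]
    return "Supply risk detected:\n" + "\n".join(lines)


headlines = [
    "Rotterdam: port strike announced",
    "Singapore: record throughput",
    "Oakland: terminal closure",
]
stock_days = {"Rotterdam": 5, "Oakland": 30}
alert = synthesis_agent(inventory_agent(news_agent(headlines), stock_days))
print(alert)
```

Each agent has one narrow job and a plain data handoff, and the final step returns nothing at all when there is no risk, which is what keeps human stakeholders from being notified unnecessarily.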

In summary, a continuous agent is contextually aware, self-updating, and capable of both cooperation and initiative.

It’s the difference between a smart research assistant who waits for your email and an autonomous analyst who sits in on your team meetings, speaks up with relevant insights, and takes on tasks of their own accord.

As one Microsoft leader described, these agents act as “persistent digital teammates” that learn from past interactions and retain institutional knowledge over time. And importantly, their presence can flip the workflow of knowledge work: rather than you having to remember to ask the AI, the AI remembers what to watch for and asks you if something should be done.

Preparing for the Continuous Agent Era

As continuous AI agents move from R&D labs and beta products into mainstream business use, leaders need to consider how this will reshape roles, workflows, and competitive strategy. Here are several high-level implications and recommendations.

Redefining Roles and Workforce Collaboration

Rather than eliminating jobs, continuous agents will augment and redefine many roles. When agents handle routine analytical or coordination tasks, human roles can shift toward higher-level work.

For example, a business analyst who used to spend 70% of her time gathering data and making reports might, with an AI agent doing that legwork, transition to a role more like an AI editor and strategist, validating the agent’s findings, asking it the right questions, and then applying judgment to the insights.

Similarly, project managers might rely on agents to track project metrics and nudge team members on deadlines, freeing the managers to focus on stakeholder communication and risk management.

The concept of a “human-AI team” will become the norm. In such teams, humans define the strategy and set goals, while agents handle execution and detailed analysis.

Companies should start training staff to work alongside AI, treating agents as collaborators. This means developing skills in prompt engineering, result interpretation, and oversight.

It also means adjusting performance metrics: if an agent is doing part of an employee’s job, how do you evaluate and credit that? HR policies and job descriptions will need updating to clarify how humans and AI share responsibilities.

The organizations that navigate this symbiosis well will see big productivity boosts without alienating their workforce. After all, as one industry commentator noted, “the real power of autonomous agents lies not in replacing human judgment but in elevating it,” by taking on the grunt work and letting people focus on vision and innovation.

Rethinking Workflows and Processes

Continuous agents will force a rethink of many business processes. Workflows that traditionally involved waiting for periodic reports or manual checkpoints can be redesigned for continuous monitoring and intervention.

Take incident management: today a team might review security alerts once a day, but with an agent on watch 24/7, the process might flip to real-time triage. Decision cycles can shorten: weekly meetings might become bi-daily syncs because the inputs (via agents) update more frequently.

Leaders should map out where having an “always-on” AI might significantly change the cadence of work. Some questions to ask: If we had up-to-the-minute insight on X at all times, how would we change our decision process? What could we do sooner or more often?

Also, consider new processes entirely enabled by agents. For instance, customer feedback loops: an agent could continuously analyze customer interactions (calls, chats) for pain points and automatically create tickets for product improvements, which product teams then address in agile fashion.

That’s a workflow that might not have been feasible before AI agents. Embracing continuous agents may also mean adopting a more agile, iterative approach in general; since the AI can adjust course quickly, teams may experiment more and adapt plans on the fly with the agent feeding them intelligence.

Governance, Oversight and Trust Architecture

If software is going to initiate actions and reach out to employees or customers on its own, trust and safety mechanisms must be in place. It’s a bit like moving from cruise control to self-driving: you need new guardrails.

Businesses should establish clear rules for what agents are allowed to do autonomously versus when human approval is required. For example, an agent might be permitted to automatically reorder inventory up to a certain cost, but above that threshold it must get sign-off. Or a marketing agent might tweak bids on ads, but not create new ad copy without review.
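Rules like these can be written down as an explicit policy that every agent action passes through. The sketch below is one hypothetical way to express it: the action names, the cost limits, and the `check` function are invented for illustration, and a real deployment would load the limits from governed configuration and log every decision.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    AUTO_APPROVE = "auto_approve"
    NEEDS_HUMAN = "needs_human"
    DENY = "deny"


@dataclass(frozen=True)
class Action:
    kind: str          # e.g. "reorder_inventory"
    cost: float = 0.0  # estimated cost in the company's currency


# Hypothetical policy table: action kind -> maximum cost the agent may approve alone.
# A limit of 0.0 means the action always requires human review.
AUTONOMY_LIMITS = {
    "reorder_inventory": 5_000.0,
    "adjust_ad_bid": 200.0,
    "create_ad_copy": 0.0,
}


def check(action: Action) -> Decision:
    """Decide whether the agent may act alone, must ask a human, or may not act at all."""
    limit = AUTONOMY_LIMITS.get(action.kind)
    if limit is None:
        return Decision.DENY              # unlisted actions are denied by default
    if limit > 0 and action.cost <= limit:
        return Decision.AUTO_APPROVE
    return Decision.NEEDS_HUMAN


print(check(Action("reorder_inventory", cost=1_200.0)))
print(check(Action("reorder_inventory", cost=9_000.0)))
print(check(Action("create_ad_copy")))
print(check(Action("wire_funds", cost=10.0)))
```

Denying unlisted actions by default is a deliberate choice in this sketch: an agent gains a new capability only when someone adds it to the policy on purpose.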

Putting these policies in place will prevent unwanted surprises. Additionally, as agents persist and accumulate knowledge, data governance becomes critical.

Agents will be privy to potentially sensitive historical information, so controls on who (or what) can query the agent, how it anonymizes data in outputs, and how it forgets or masks stale sensitive info, are important to design from the outset.

Tech leaders talk about the need for a “trust architecture” around agents, encompassing security, privacy, and reliability measures.

Concretely, this could involve audit logs of agent decisions, “AI ethics” review boards in the company to evaluate agent behavior, and stress-testing agents for bias or error propagation before wide deployment.

Continuous agents will only be as useful as they are trusted by your team; if people don’t trust the AI’s suggestions or worry it’s operating opaquely, they’ll circumvent it. Thus, transparency (the agent explaining why it did something) will be a key feature to demand from vendors or build into internal solutions.

We may even see the rise of roles like “AI Auditor” or “Agent Controller”: individuals responsible for regularly reviewing agent outputs and ensuring they meet standards.

Competitive and Strategic Opportunities

On a higher plane, executives should ask: how could continuous AI agents fundamentally change our business model or competitive landscape? Just as the internet or mobile did in previous eras, this technology can enable new offerings or threats.

For instance, could you offer your customers a persistent AI advisor as part of your product (for example, a financial app that includes an AI wealth coach that continuously learns the user’s goals)?

If so, that might deepen customer engagement. Or think about strategy formation: companies might start running AI-driven scenario simulators continuously in the background to inform long-term planning (some hedge funds are likely already doing something similar for trading strategies).

There’s also the question of competitive intelligence: if your competitors are using continuous agents and you are not, might they spot market shifts faster or react more quickly to customer needs? On the flip side, being an early mover with agents could differentiate your services.

For example, an e-commerce company that deploys proactive customer service agents (which reach out to customers to resolve issues before the customer contacts support) could boast significantly higher customer satisfaction.

These are the sorts of strategic angles leaders should brainstorm now. The cost of entry for deploying AI agents is dropping, so competitive barriers won’t be primarily about access to the tech; it will be about how creatively and effectively you use it in your business processes.

Change Management and Culture

Last but not least, preparing the organization culturally is crucial. There may be initial skepticism or fear among staff (“Will the AI take my job?” or “Can I trust its work?”).

Transparent communication about why the company is adopting continuous agents and how people’s roles will shift is important to get buy-in.

Involve employees in pilot projects so they feel part of the change and can provide input on the agent’s performance. Upskilling programs can help employees develop AI literacy; for instance, training on how to effectively “coach” the agents and interpret their results.

Companies that treat the rollout of AI agents as a strategic initiative with executive sponsorship, rather than a pure IT experiment, will fare better. As with any major change, it helps to celebrate quick wins: share stories internally of how the new agent saved someone time or uncovered a valuable insight.

This turns the narrative from “AI as threat” to “AI as teammate,” which is the mindset needed to truly leverage these tools. When done right, the organization can develop a culture that embraces data-driven, continuous improvement (since the agents will constantly be surfacing improvement opportunities).

In fact, one could say that continuous agents will thrive best in a culture that also values continuous learning for humans: the two go hand in hand.

So, Are You Ready For AI That Reaches Out To You?

The evolution from deep research agents to continuous agents marks a profound shift in how we interact with technology. We’re moving from a world where we go to software with a question, to one where software comes to us with answers (and questions!) we didn’t even know we needed.

This could lead to unprecedented levels of proactivity and intelligence in our organizations, but it also challenges us to rethink our roles, our trust in machines, and our readiness for constant change.

As you finish reading this, somewhere an AI agent may already be piecing together data that will influence tomorrow’s decisions.

The provocative question for all of us, especially those in leadership, is: Are we ready for a world where software reaches out to us, not the other way around?

The companies that say “yes,” and plan accordingly, will be the ones writing the next chapter of business in the age of autonomous agents. The age of always-on, context-savvy AI is dawning; now is the time to decide whether you’ll lead that change or scramble to catch up.

The continuous agents are coming online, and they just might change how we work, forever.